Towards Principled Evaluations of Graph-Learning Datasets

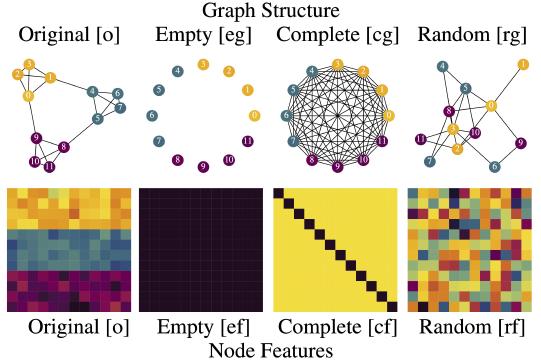

Benchmark datasets have proved pivotal to the success of graph learning, and good benchmark datasets are crucial to guide the development of the field. Recent research has highlighted problems with graph-learning datasets and benchmarking practices, revealing, for example, that methods that ignore the graph structure can outperform graph-based approaches on popular benchmark datasets. Such findings raise two questions: (1) What makes a good graph-learning dataset, and (2) how can we evaluate dataset quality in graph learning? Our work addresses these questions. Because the classic evaluation setup uses datasets to evaluate models, it does not apply to evaluating the datasets themselves. Hence, we start from first principles. Observing that graph-learning datasets uniquely combine two modes (the graph structure and the node features), we introduce RINGS, a flexible and extensible mode-perturbation framework that assesses the quality of graph-learning datasets through dataset ablations, i.e., by quantifying differences between the original dataset and its perturbed representations. Within this framework, we propose two measures (performance separability and mode complementarity) as evaluation tools, each assessing from a distinct angle the capacity of a graph dataset to benchmark the power and efficacy of graph-learning methods. We demonstrate the utility of our framework for graph-learning dataset evaluation in an extensive set of experiments and derive actionable recommendations for improving the evaluation of graph-learning methods. Our work opens new research directions in data-centric graph learning, and it constitutes a first step toward the systematic evaluation of evaluations.
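The mode-perturbation idea can be illustrated with a small sketch. This is not the RINGS implementation: the graph representation (an edge list plus a per-node feature list), the perturbation names, and the function names are all hypothetical. Each perturbation removes or distorts one mode, so that a model trained on each view can be compared with the same model trained on the original. As an illustrative stand-in for performance separability (whose exact definition in RINGS is not reproduced here), the sketch uses a generic two-sample permutation test asking whether per-seed scores on the original dataset are distinguishable from scores on a perturbed one. Model training itself is omitted.

```python
import random
from itertools import combinations
from statistics import mean
from typing import Dict, List, Sequence, Tuple

Edges = List[Tuple[int, int]]
Features = List[List[float]]
View = Tuple[Edges, Features]


# Hypothetical perturbations: each one removes or distorts a single mode.
def original(edges: Edges, feats: Features, rng: random.Random) -> View:
    return list(edges), [row[:] for row in feats]


def empty_graph(edges: Edges, feats: Features, rng: random.Random) -> View:
    # Structure ablated: no edges, features untouched.
    return [], [row[:] for row in feats]


def complete_graph(edges: Edges, feats: Features, rng: random.Random) -> View:
    # Structure made uninformative: every pair of nodes is connected.
    return list(combinations(range(len(feats)), 2)), [row[:] for row in feats]


def constant_features(edges: Edges, feats: Features, rng: random.Random) -> View:
    # Features ablated: every node gets the same one-dimensional feature.
    return list(edges), [[1.0] for _ in feats]


def shuffled_features(edges: Edges, feats: Features, rng: random.Random) -> View:
    # Alignment between modes destroyed: features permuted across nodes.
    perm = list(range(len(feats)))
    rng.shuffle(perm)
    return list(edges), [feats[i][:] for i in perm]


PERTURBATIONS = {
    "original": original,
    "empty_graph": empty_graph,
    "complete_graph": complete_graph,
    "constant_features": constant_features,
    "shuffled_features": shuffled_features,
}


def perturb(edges: Edges, feats: Features, seed: int = 0) -> Dict[str, View]:
    """Return every perturbed view of one graph, keyed by perturbation name."""
    rng = random.Random(seed)
    return {name: fn(edges, feats, rng) for name, fn in PERTURBATIONS.items()}


def permutation_p_value(
    a: Sequence[float], b: Sequence[float], n_perm: int = 2000, seed: int = 0
) -> float:
    """Two-sample permutation test on the absolute difference of mean scores."""
    rng = random.Random(seed)
    observed = abs(mean(a) - mean(b))
    pooled = list(a) + list(b)
    hits = 0
    for _ in range(n_perm):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[: len(a)]) - mean(pooled[len(a) :]))
        if diff >= observed - 1e-12:  # tolerance for floating-point ties
            hits += 1
    return (hits + 1) / (n_perm + 1)
```

In use, one would train the same model with several seeds on each view, collect the scores, and pass the original and perturbed scores to `permutation_p_value`. A large p-value for `empty_graph`, for instance, would suggest that the graph structure adds little for that model on that dataset.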

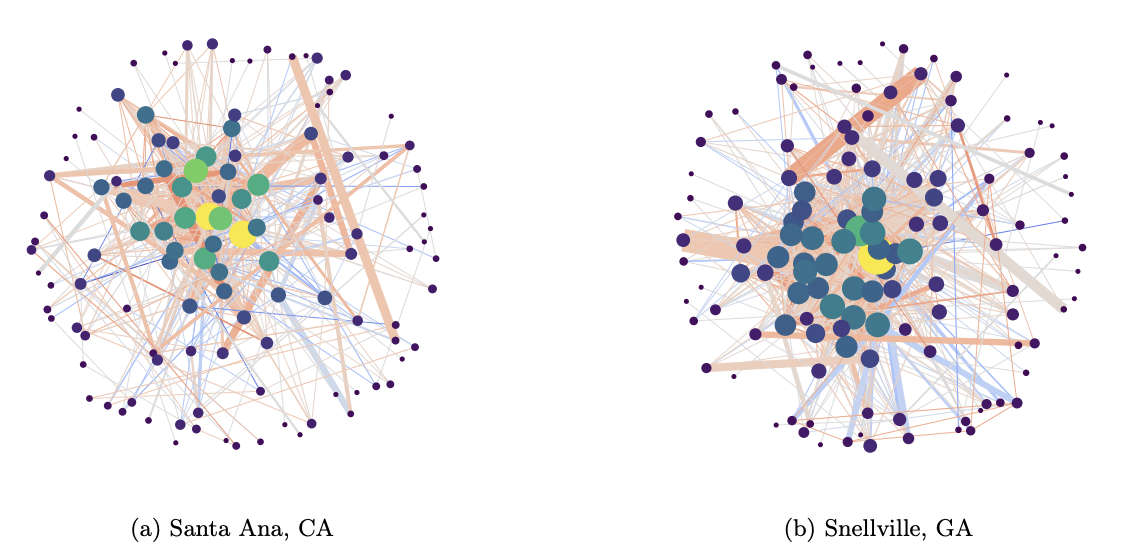

Analyzing Physician-Patient Referral Network Topology

APPARENT is an interactive dashboard for analyzing healthcare networks. By providing access to precomputed descriptions of patient referral networks, we aim to help users better understand, analyze, and improve care delivery in the United States. Our mission is to put advanced analytical methods from graph theory, network science, and machine learning at the fingertips of healthcare professionals. Use APPARENT to explore local or global trends in referral networks with our built-in dashboards, or design your own SQL queries to directly access the underlying database. For more advanced users, we also maintain the APPARENT Python library for custom construction and analysis of referral networks.

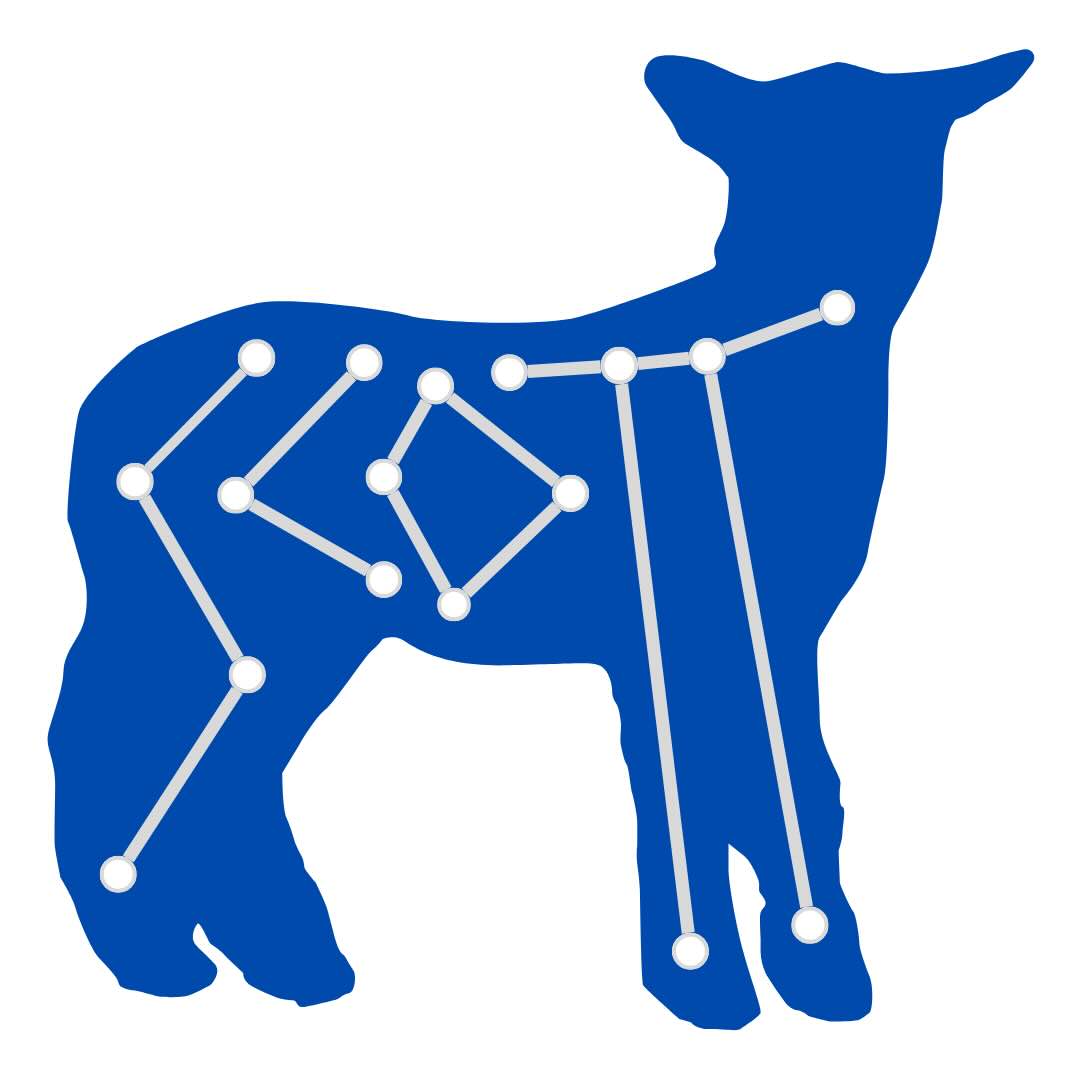

Synthesizing Curvature Operations & Topological Tools

SCOTT is a Python package for computing curvature filtrations of graphs and graph distributions. Our method introduces a novel way to compare graph distributions by combining discrete curvature on graphs with persistent homology, providing descriptors of graph sets that are robust, stable, expressive, and compatible with statistical testing.
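To make the combination of discrete curvature and persistent homology concrete, here is a minimal sketch; it is not SCOTT's API. It assumes the simplest Forman curvature of an unweighted graph, F(u, v) = 4 - deg(u) - deg(v), as the discrete curvature, uses the curvature values as an edge filtration, and computes the resulting 0-dimensional persistence diagram with union-find. The vertex values (the minimum curvature of incident edges, or 0 for isolated vertices) and the function names `forman_curvature` and `zero_dim_persistence` are hypothetical choices for illustration.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

Edge = Tuple[int, int]


def forman_curvature(edges: List[Edge]) -> Dict[Edge, int]:
    """Simplest Forman curvature of an unweighted graph: 4 - deg(u) - deg(v)."""
    deg: Dict[int, int] = defaultdict(int)
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return {(u, v): 4 - deg[u] - deg[v] for u, v in edges}


def zero_dim_persistence(
    nodes: Iterable[int], curvature: Dict[Edge, int]
) -> List[Tuple[float, float]]:
    """0-dimensional persistence pairs of the sublevel filtration on curvature."""
    nodes = list(nodes)
    incident: Dict[int, List[int]] = defaultdict(list)
    for (u, v), c in curvature.items():
        incident[u].append(c)
        incident[v].append(c)
    # Hypothetical vertex values: a vertex enters with its lowest-curvature edge
    # (isolated vertices enter at 0), so every edge enters after its endpoints.
    birth = {x: min(incident[x]) if incident[x] else 0 for x in nodes}
    parent = {x: x for x in nodes}

    def find(x: int) -> int:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    pairs: List[Tuple[float, float]] = []
    # Add edges in order of increasing curvature (ties broken by the edge itself).
    for (u, v), c in sorted(curvature.items(), key=lambda item: (item[1], item[0])):
        ru, rv = find(u), find(v)
        if ru == rv:
            continue  # edge closes a cycle; irrelevant in dimension 0
        # Elder rule: the component born later dies when the two merge.
        if birth[ru] < birth[rv]:
            ru, rv = rv, ru
        pairs.append((birth[ru], c))
        parent[ru] = rv  # the older root survives and keeps its birth value
    # Components that never merge persist forever.
    pairs.extend((birth[r], math.inf) for r in sorted({find(x) for x in nodes}))
    return pairs
```

For a triangle 0-1-2 with a pendant vertex 3 attached to vertex 2, the triangle edges at vertex 2 have curvature -1, the remaining edges have curvature 0, and the diagram is `[(-1, -1), (-1, -1), (0, 0), (-1, inf)]`. Zero-length pairs are often discarded; comparing such diagrams across graph sets is the step this sketch leaves out.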